The Gridware Cluster Scheduler, based on Open Cluster Scheduler (formerly Sun Grid Engine), represents decades of continuous development and refinement in distributed computing resource management. With its origins tracing back to the early days of grid computing, this scheduler has evolved to handle an incredibly diverse range of use cases – from small research clusters to massive enterprise computing environments with thousands of nodes.

This mature foundation means the scheduler has been battle-tested across virtually every conceivable workload scenario: high-throughput computing, parallel MPI jobs, GPU workloads, containerized applications, and complex workflow dependencies. The priority system we’ll explore in this post is the result of years of optimization to balance fairness, efficiency, and flexibility.

The Gridware Cluster Scheduler uses a sophisticated priority calculation system to determine the order in which jobs are scheduled and allocated resources. This blog post provides a comprehensive yet easy-to-understand guide to the job priority configuration hierarchy and how various policies interact to calculate final job priorities.

Overview of the Priority System

The scheduler combines multiple policy components through a weighting system to calculate a final priority value for each job. In most cluster environments, three fundamental policies drive the priority calculation process:

- Share Tree Policy – Manages fair resource distribution

- Functional Policy – Implements organizational priorities

- Override Policy – Provides manual priority control

The Priority Calculation Hierarchy

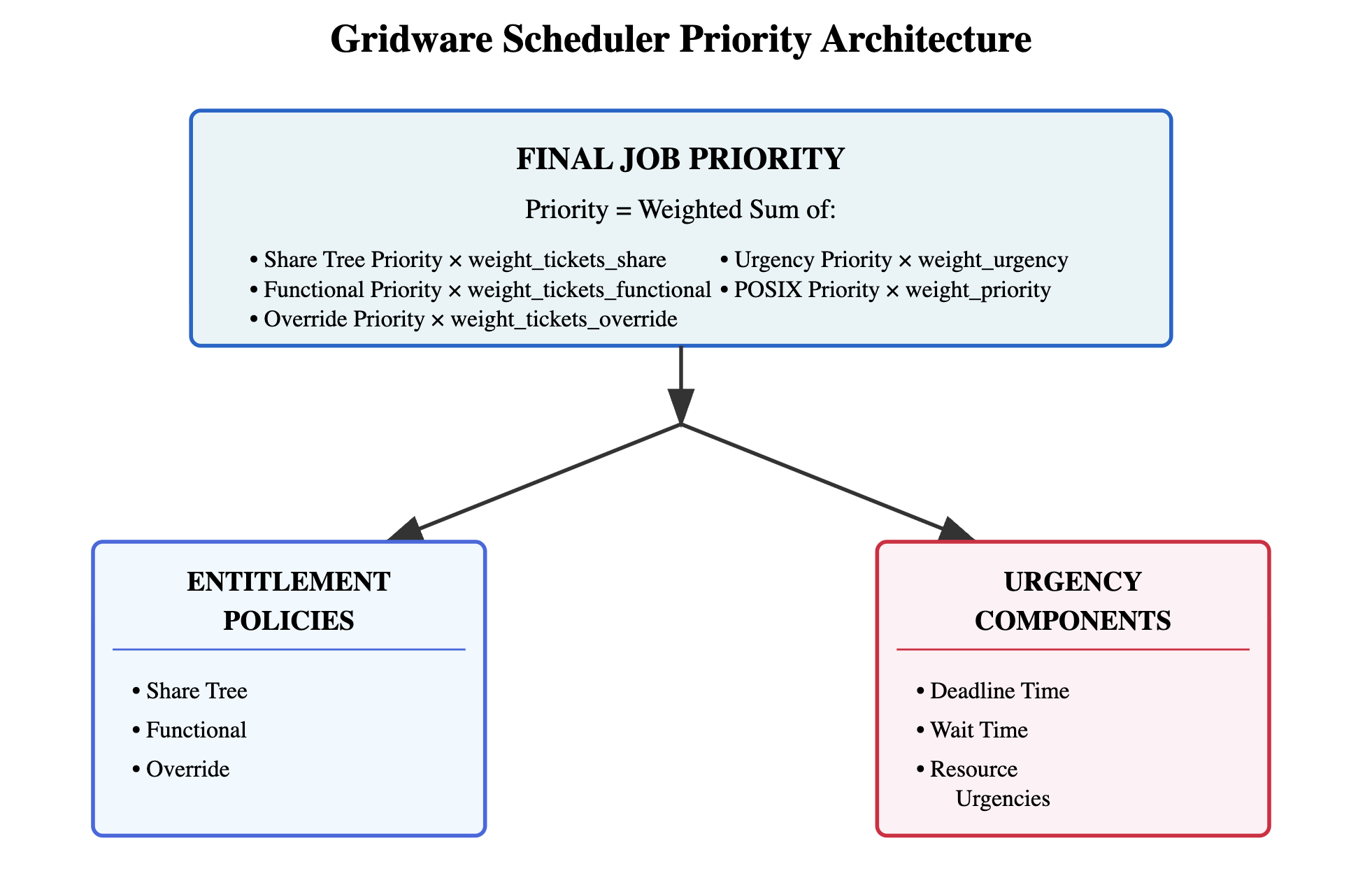

Overall Priority Architecture

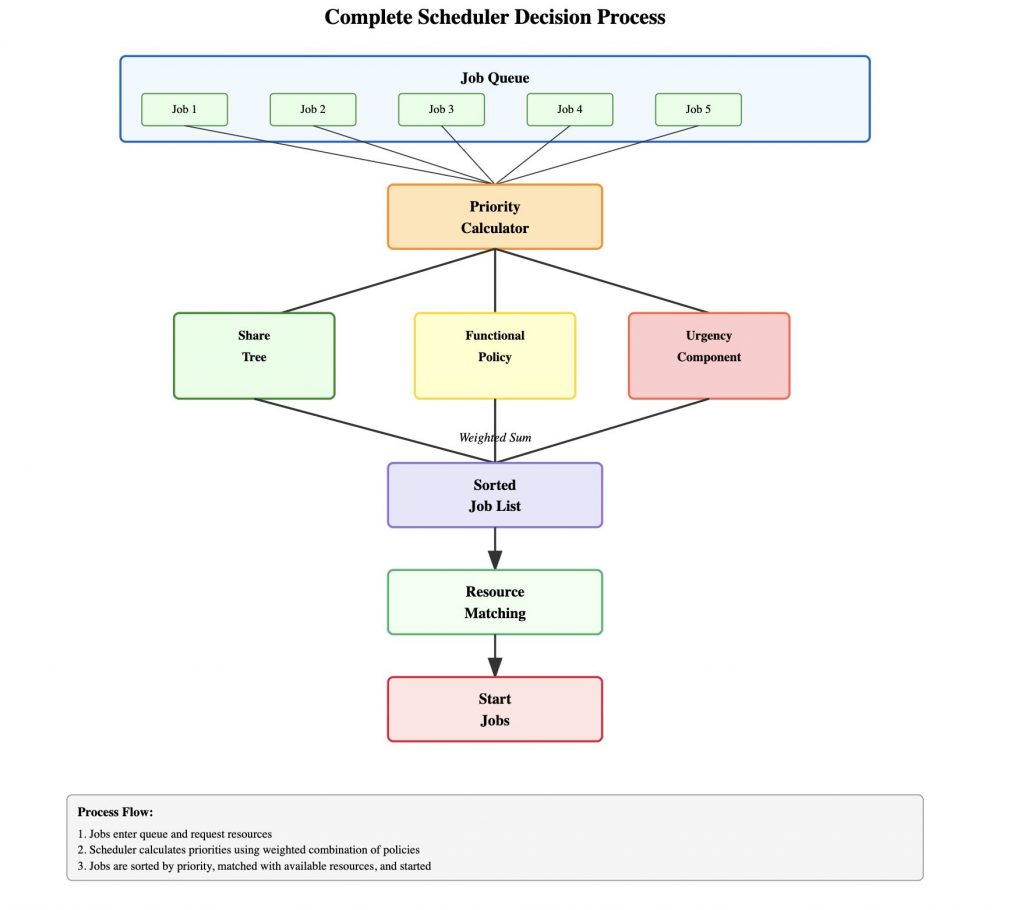

This structure shows how the entitlement policies (Share Tree, Functional, Override) and the urgency components (Deadline Time, Wait Time, Resource Urgencies) are weighted and combined to produce the final job priority.
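
For readers who prefer code, here is a minimal Python sketch of that combination. It follows the weighted-sum form described for Grid Engine derived schedulers in the sge_priority(5) man page: weight_priority × normalized POSIX priority + weight_urgency × normalized urgency + weight_ticket × normalized tickets. The min-max normalization across pending jobs, the linear mapping of the POSIX range onto 0..1, and the helper names JobValues, normalize, and final_priorities are assumptions made for illustration, not the scheduler's implementation.

```python
from dataclasses import dataclass


@dataclass
class JobValues:
    """Raw per-job inputs (hypothetical container, not a scheduler API)."""
    name: str
    tickets: float  # share tree + functional + override tickets
    urgency: float  # resource + waiting time + deadline contributions
    posix: int      # POSIX priority from qsub -p, range -1023..1024


def normalize(values):
    """Min-max normalize into [0, 1]; if all values are equal, all map to 0.0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def final_priorities(jobs, weight_ticket, weight_urgency, weight_priority):
    """Combine the normalized components with the three top-level weights."""
    ntckts = normalize([job.tickets for job in jobs])
    nurg = normalize([job.urgency for job in jobs])
    prios = {}
    for job, nt, nu in zip(jobs, ntckts, nurg):
        npprior = (job.posix + 1023) / 2047  # assumed linear map of -1023..1024 onto 0..1
        prios[job.name] = (weight_priority * npprior
                           + weight_urgency * nu
                           + weight_ticket * nt)
    return prios


jobs = [
    JobValues("simulation", tickets=800.0, urgency=1000.0, posix=0),
    JobValues("urgent_analysis", tickets=10800.0, urgency=1000.0, posix=0),
]
prios = final_priorities(jobs, weight_ticket=0.01, weight_urgency=0.1,
                         weight_priority=1.0)
for name in sorted(prios, key=prios.get, reverse=True):
    print(f"{name}: {prios[name]:.4f}")
```

Both jobs share the same urgency and POSIX priority, so in this example the ticket difference alone decides the order.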

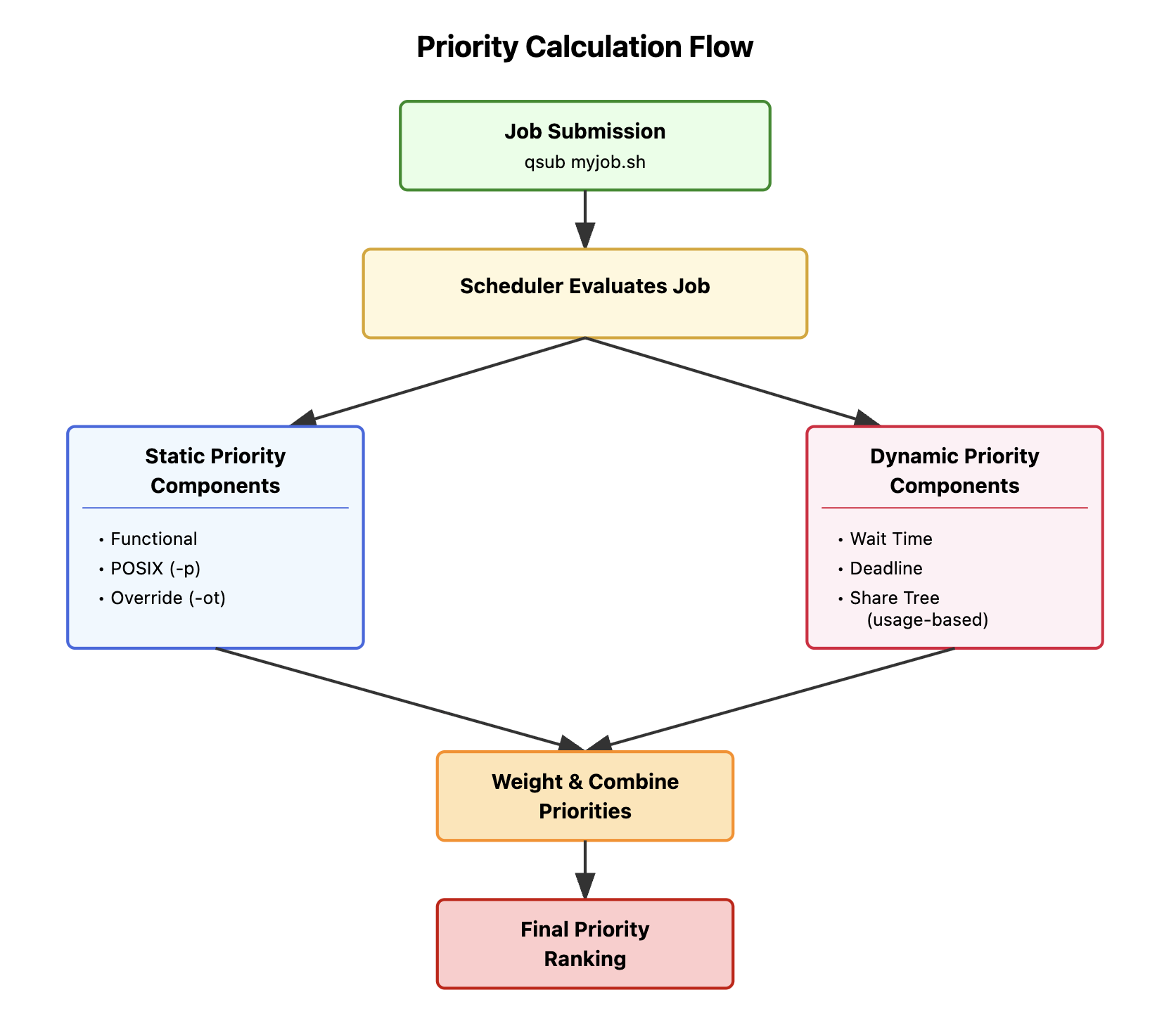

This flow diagram illustrates the complete process from job submission through final priority ranking. Static components (Functional, POSIX, Override) remain constant, while dynamic components (Wait Time, Deadline, Share Tree usage) change over time.

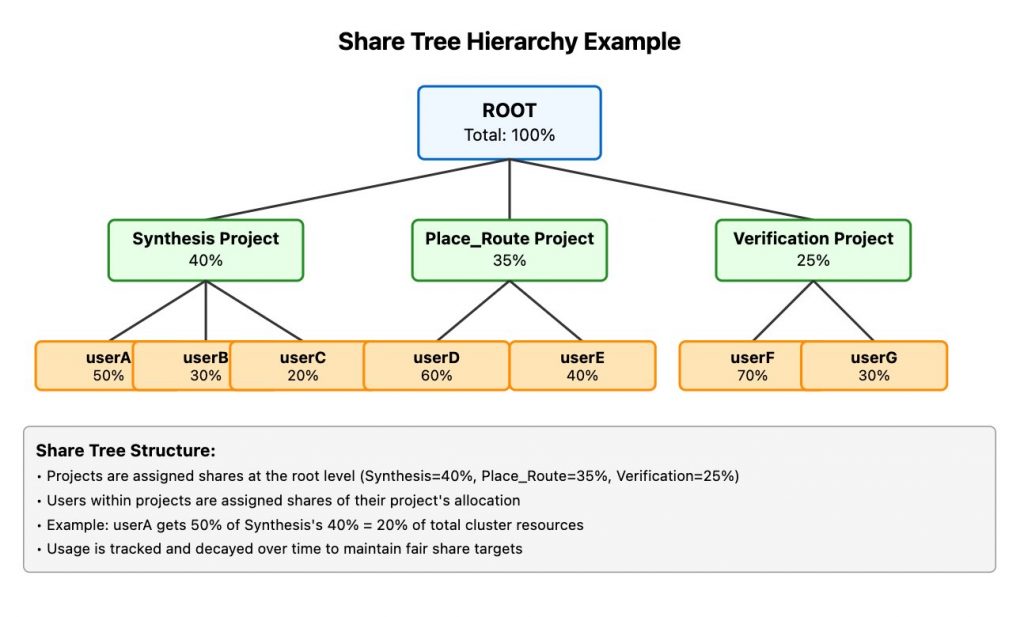

In this example, the share tree enables hierarchical resource allocation where EDA projects receive proportional cluster resources based on configured absolute share values, and users within each project share that project’s allocation proportionally. To achieve a 40% allocation for Synthesis Project, 35% for Place Route Project, and 25% for Verification Project, you could configure values like 40, 35, and 25 respectively (or 400, 350, and 250)—the system calculates relative percentages by dividing each value by the total sum.
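
The percentage arithmetic can be checked with a few lines of Python. share_percentages is a hypothetical helper that only normalizes sibling nodes at one level of the tree:

```python
def share_percentages(shares):
    """Convert sibling share values into percentages of their sum."""
    total = sum(shares.values())
    return {name: 100.0 * value / total for name, value in shares.items()}


small = share_percentages({"synthesis": 40, "placeroute": 35, "verification": 25})
large = share_percentages({"synthesis": 400, "placeroute": 350, "verification": 250})
print(small)
print(small == large)  # True
```

Because only the ratios matter, scaling all sibling values by the same factor leaves the allocation unchanged.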

Policy Components in Detail

1. Share Tree Policy

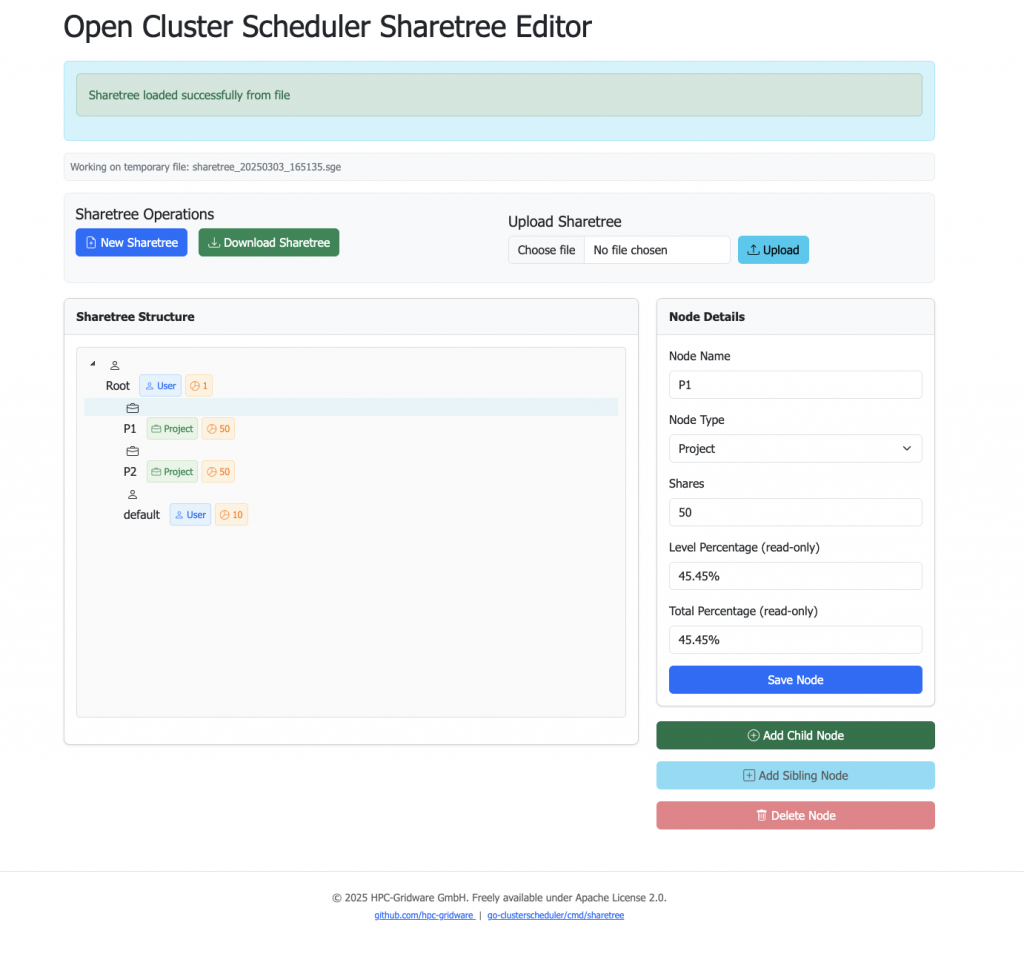

The Share Tree Policy ensures fair distribution of resources based on configured shares. It tracks historical resource usage and adjusts priorities to maintain the desired resource allocation ratios. Note that shares are assigned as absolute amounts, not as percentages. For simplified configuration and visualization, we provide an open source share tree editor.

Key Concepts:

- Shares: Numerical values representing resource entitlements

- Usage Decay: Historical usage gradually decreases over time

- Hierarchical Structure: Users and projects organized in a tree structure
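
Usage decay behaves like exponential aging with a half-life. The sketch below assumes the half-life corresponds to the halftime scheduler parameter (given in hours); decayed_usage is a hypothetical helper, 168 hours is only an example value, and the scheduler's internal bookkeeping may differ in detail.

```python
def decayed_usage(usage, hours_elapsed, halftime_hours=168.0):
    """Usage remaining after exponential decay with the given half-life in hours."""
    if halftime_hours <= 0:
        raise ValueError("this sketch requires a positive half-life")
    return usage * 0.5 ** (hours_elapsed / halftime_hours)


# 1000 CPU hours of usage recorded now, one half-life ago and two half-lives ago
for hours in (0, 168, 336):
    print(hours, decayed_usage(1000.0, hours))
```

Older usage therefore weighs less and less, so a user who was busy two weeks ago is not penalized as much as one who was busy yesterday.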

Example Configuration:

```
qconf -sst
ROOT=1
  synthesis=4000
    usera=500
    userb=300
    userc=200
  placeroute=3500
    userd=600
    usere=400
  verification=2500
    userf=700
    userg=300
```
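
The share tree configuration translates into per-user target entitlements by multiplying the fractions along each path of the tree. This minimal sketch assumes every user has pending work and ignores historical usage, which the real share tree policy compares against these targets; SHARE_TREE and user_entitlements are hypothetical names:

```python
# Share tree from the qconf -sst example: project -> (shares, {user: shares})
SHARE_TREE = {
    "synthesis": (4000, {"usera": 500, "userb": 300, "userc": 200}),
    "placeroute": (3500, {"userd": 600, "usere": 400}),
    "verification": (2500, {"userf": 700, "userg": 300}),
}


def user_entitlements(tree):
    """Return each user's target fraction of the whole cluster.

    A node's fraction of its parent is its shares divided by the total shares
    of all nodes under the same parent; a user's cluster fraction is the
    product of the fractions along its path.
    """
    project_total = sum(shares for shares, _ in tree.values())
    result = {}
    for project_shares, users in tree.values():
        project_fraction = project_shares / project_total
        user_total = sum(users.values())
        for user, user_shares in users.items():
            result[user] = project_fraction * user_shares / user_total
    return result


for user, fraction in user_entitlements(SHARE_TREE).items():
    print(f"{user}: {fraction:.1%}")
```

For example, usera holds 500 of the 1000 user shares in synthesis, so it targets 50% of that project's 40%, which is 20% of the cluster.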

2. Functional Policy

The Functional Policy assigns static priorities based on user, project, department, and job attributes. This allows organizations to implement business priorities.

Configuration Elements:

- User-based weights: Individual user priorities

- Project-based weights: Project-level priorities

- Department weights: Organizational unit priorities
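
Conceptually, the functional policy forms a weighted sum over these categories plus individual jobs. A minimal sketch, assuming illustrative category weights that sum to 1 and hypothetical per-category ticket values (functional_priority and CATEGORY_WEIGHTS are not scheduler APIs):

```python
# Illustrative category weights, named after the weight_user, weight_project,
# weight_department and weight_job scheduler parameters.
CATEGORY_WEIGHTS = {"user": 0.25, "project": 0.25, "department": 0.25, "job": 0.25}


def functional_priority(tickets, weights=CATEGORY_WEIGHTS):
    """Weighted sum of per-category functional tickets for one job.

    Categories missing from `tickets` contribute nothing.
    """
    return sum(weight * tickets.get(category, 0.0)
               for category, weight in weights.items())


# Hypothetical ticket values for one job
print(functional_priority({"user": 100.0, "project": 400.0, "department": 200.0}))  # 175.0
```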

Priority Calculation:

```
Functional Priority = weight_user       × user_tickets
                    + weight_project    × project_tickets
                    + weight_department × dept_tickets
                    + weight_job        × job_tickets
```

3. Override Policy

The Override Policy allows administrators to manually grant tickets that raise a job's priority above what the other policies would give it. This is useful for urgent jobs or special circumstances. Override tickets can be granted to individual jobs as well as to users, projects, and departments. The share_override_tickets scheduler setting controls whether the tickets granted to such an object are shared among all of its jobs or given in full to each job.
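
The effect of share_override_tickets can be sketched as follows. This is a simplified model that assumes sharing splits an object's override tickets equally among its jobs; the scheduler's exact rules for counting running and pending jobs are not modeled, and override_tickets_per_job is a hypothetical helper:

```python
def override_tickets_per_job(object_tickets, n_jobs, share_override_tickets):
    """Override tickets each job receives from one override object.

    Simplified model: with sharing enabled the object's tickets are split
    equally among its jobs; otherwise every job receives the full amount.
    """
    if n_jobs <= 0:
        return 0.0
    if share_override_tickets:
        return object_tickets / n_jobs
    return float(object_tickets)


# A user with 1000 override tickets and four jobs
print(override_tickets_per_job(1000, 4, share_override_tickets=True))   # 250.0
print(override_tickets_per_job(1000, 4, share_override_tickets=False))  # 1000.0
```

With sharing, submitting more jobs does not multiply a user's override boost; without it, every job carries the full amount.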

Usage Example:

```
# Set override tickets for a specific job
qalter -ot 1000 <job_id>

# Check priority of waiting jobs
qstat -ext
```

4. Urgency Components

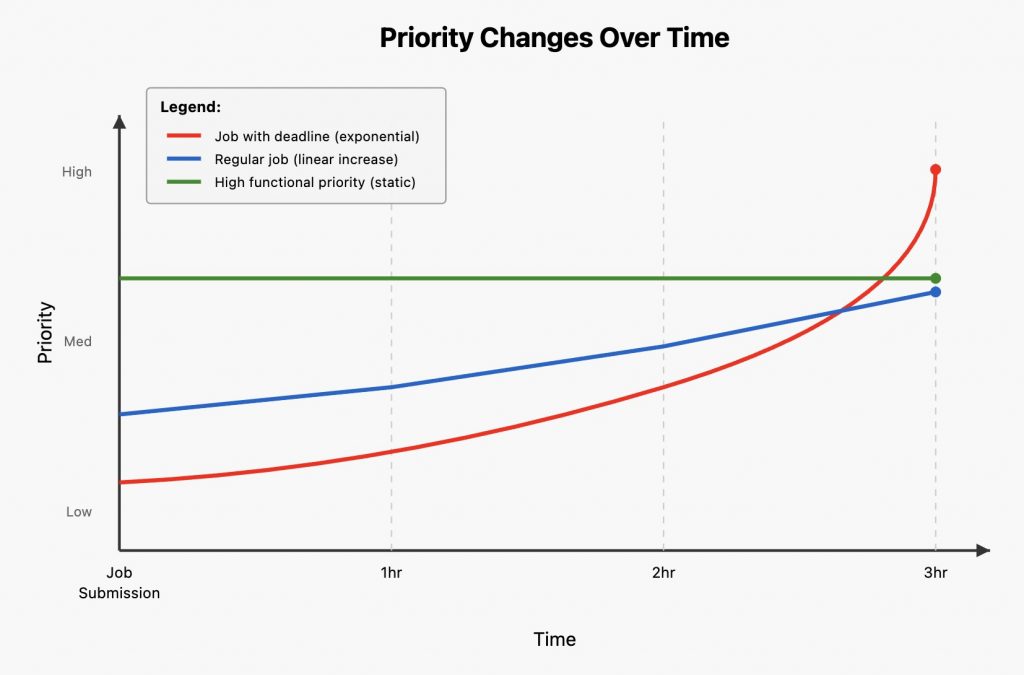

Urgency combines a contribution from the requested resources with contributions that increase dynamically with job waiting time and approaching deadlines:

Deadline Urgency:

- Increases as jobs approach their deadline

- Formula: weight_deadline / (time remaining in seconds until the deadline)

Wait Time Urgency:

- Increases the longer a job waits in the queue

- Prevents job starvation

- Formula: weight_waiting_time × job's waiting time in seconds

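Resource Urgency:

- Based on requested resources and the urgency value configured for each resource in the complex configuration (qconf -mc)

- Jobs requesting resources with high urgency values, such as scarce or expensive resources, gain priority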
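
The three contributions add up to a job's raw urgency. The sketch below models that sum under stated assumptions: resource requests are counted once per slot, the remaining deadline time is clamped to at least one second, and all parameter names apart from weight_waiting_time and weight_deadline are hypothetical. The deadline term only applies to jobs submitted with a deadline (qsub -dl).

```python
def urgency(resource_requests, urgency_values, slots, waiting_seconds,
            weight_waiting_time, seconds_to_deadline=None,
            weight_deadline=3600000.0):
    """Raw urgency = resource + waiting time + deadline contributions."""
    # Resource contribution: urgency value of each requested resource times
    # the requested amount, counted once per slot (simplifying assumption).
    rrcontr = sum(slots * amount * urgency_values.get(name, 0.0)
                  for name, amount in resource_requests.items())
    # Waiting time contribution grows linearly while the job is pending.
    wtcontr = weight_waiting_time * waiting_seconds
    # Deadline contribution grows as the deadline approaches; clamping the
    # remaining time to at least 1 second avoids a division by zero (assumption).
    dlcontr = 0.0
    if seconds_to_deadline is not None:
        dlcontr = weight_deadline / max(seconds_to_deadline, 1.0)
    return rrcontr + wtcontr + dlcontr


# 4-slot job requesting one unit of a hypothetical "gpu" resource per slot,
# pending for one hour, with a deadline two hours away
print(urgency({"slots": 1, "gpu": 1}, {"slots": 1000.0, "gpu": 5000.0},
              slots=4, waiting_seconds=3600, weight_waiting_time=0.1,
              seconds_to_deadline=7200))
```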

5. POSIX Priority

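Similar in spirit to traditional UNIX nice values (-20 to +19), but on a much wider scale, the POSIX priority lets users rank jobs relative to each other:

- Range: -1023 to +1024

- Default: 0

- Regular users can only lower the priority of their jobs; raising it above the default requires administrative privileges

Usage:

```
qsub -p <priority>
```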

Scheduler Configuration Parameters

Key parameters that control the priority calculation can be displayed with qconf -ssconf and modified with qconf -msconf:

```
weight_ticket: 0.0100000          # Overall weight of ticket policies
weight_tickets_functional: 0      # Functional ticket pool (0 switches the functional policy off)
weight_tickets_share: 0           # Share tree ticket pool (0 switches the share tree policy off)
weight_urgency: 0.1000            # Weight for urgency
weight_priority: 1.0000           # Weight for POSIX priority
weight_tickets_override: 50000    # Weight for override tickets
weight_waiting_time: 0.0000       # Weight for waiting time
weight_deadline: 3600000.000000   # Weight for deadline
```

Practical Examples

Priority Calculation Example

This concrete example shows how different priority components are weighted and combined to produce a final priority value.
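
To make the weighting concrete, take the top-level weights from the configuration parameters listed earlier (weight_priority = 1.0, weight_urgency = 0.1, weight_ticket = 0.01) and a hypothetical job whose normalized POSIX priority, urgency, and ticket values are 0.5, 0.8, and 0.6. Assuming the weighted-sum form of the final priority:

```latex
\begin{align*}
\text{prio} &= w_{\text{priority}}\,\text{npprior} + w_{\text{urgency}}\,\text{nurg} + w_{\text{ticket}}\,\text{ntckts} \\
            &= 1.0 \times 0.5 + 0.1 \times 0.8 + 0.01 \times 0.6 \\
            &= 0.5 + 0.08 + 0.006 = 0.586
\end{align*}
```

Because each normalized value lies between 0 and 1, these weights cap the urgency contribution at 0.1 and the ticket contribution at 0.01, so with this configuration the POSIX priority dominates and tickets mostly break ties.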

Example 1: Basic Priority

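```
# Submit job with POSIX priority (values above 0 require administrative privileges)
qsub -p 100 simulation.sh

# Show the job's details
qstat -j <job_id>

# Show the priority components of pending jobs
qstat -pri

# Check the priority of the waiting jobs (running jobs have 0 priority)
qstat -ext
```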

Example 2: Inspecting Department-Based Priorities

```
# Functional policy configuration
qconf -su defaultdepartment
fshare 100
...
qconf -su research_dept
fshare 200
...
# Jobs from research_dept will have higher functional priority
```
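
With fshare 200 versus 100, research_dept is entitled to two thirds of the tickets in the department category. How those tickets reach individual jobs also depends on how many jobs each department has pending. The sketch below assumes that the share_functional_shares scheduler parameter divides an object's functional shares among its jobs when true and gives each job the full shares when false; tickets_per_job and the pool size of 2500 tickets are hypothetical.

```python
def tickets_per_job(fshares, job_counts, pool, share_functional_shares=True):
    """Department-category functional tickets received by one job per department.

    fshares: department -> fshare value
    job_counts: department -> number of pending jobs
    pool: tickets available to the department category (hypothetical number)
    """
    # Shares carried by a single job of each department that has jobs.
    per_job_shares = {}
    for dept, count in job_counts.items():
        if count > 0:
            shares = fshares.get(dept, 0)
            per_job_shares[dept] = shares / count if share_functional_shares else shares
    total = sum(per_job_shares[d] * job_counts[d] for d in per_job_shares)
    if total == 0:
        return {dept: 0.0 for dept in per_job_shares}
    # Tickets are handed out in proportion to the shares each job carries.
    return {dept: pool * s / total for dept, s in per_job_shares.items()}


fshares = {"defaultdepartment": 100, "research_dept": 200}
jobs = {"defaultdepartment": 1, "research_dept": 4}
for mode in (True, False):
    print(mode, tickets_per_job(fshares, jobs, pool=2500, share_functional_shares=mode))
```

Under these assumptions, with one defaultdepartment job and four research_dept jobs, research_dept as a whole still receives twice as many tickets, but when shares are divided each research job can end up with fewer tickets than the single default job.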

Example 3: Emergency Job Handling

```
# Submit a job
qsub urgent_analysis.sh

# Make job 123 urgent by assigning override tickets
# (changing override tickets requires operator or manager privileges)
qalter -ot 10000 123
```

Best Practices

This diagram shows the entire flow from job queue through priority calculation, resource matching, and job execution. Understanding this process helps administrators optimize scheduler configuration. For more information, please consult the man pages, the Gridware Cluster Scheduler Administrator Guide, or reach out to us directly.

Policy Balance Guidelines

- Balance Policy Weights: Start with default weights and adjust based on organizational needs

- Monitor Share Tree: Regularly check usage patterns with qacct commands. Use the sge_share_mon utility (in utilbin/<arch>) to monitor the share tree; recent Gridware Cluster Scheduler versions include an sge_share_mon man page.

- Document Policies: Maintain clear documentation of priority configurations

- Test Changes: Use test queues before implementing priority changes

- Regular Reviews: Periodically review and adjust policies based on usage patterns

Conclusion

The Gridware Cluster Scheduler’s priority system provides flexible and powerful mechanisms for managing job scheduling in complex environments. By understanding how share tree, functional, override, urgency, and POSIX priorities interact, administrators can configure the scheduler to meet specific organizational requirements while ensuring fair resource allocation.

The key to successful priority configuration is finding the right balance between different policies and regularly monitoring the system to ensure it continues to meet evolving needs. Start with conservative weight values and adjust based on observed behavior and user feedback.

Discover More

To learn more about how Gridware can revolutionize your HPC and AI workloads, contact us for a personalized consultation at dgruber@hpc-gridware.com.

Appendix:

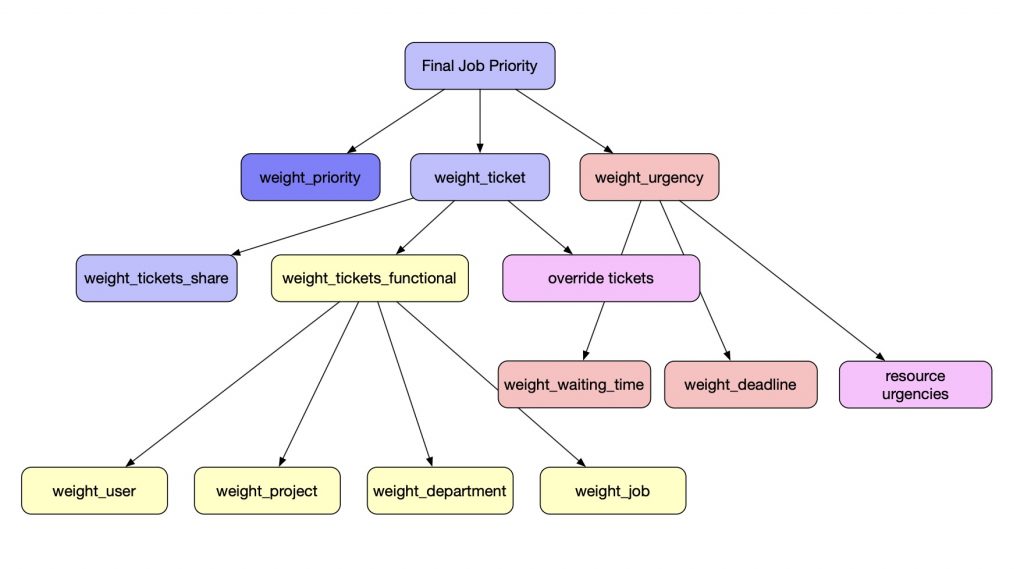

The scheduler configuration output (qconf -ssconf) displays all weight parameters in a “flat” format that can obscure how the policies are actually combined through weighting factors. Viewed as a tree, the configuration makes it clear that setting a parent weight to zero effectively “switches off” the entire branch below it.
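
The switching-off effect can be modeled by multiplying weights along each path of a tree. The tree below is a hypothetical, simplified stand-in for the diagram: the 1.0 entries are placeholders for parameters that are really ticket pools or per-second rates, the "override" leaf is an illustrative name, and effective_weights is not a scheduler function.

```python
# Hypothetical, simplified weight tree: (name, weight, children).
WEIGHT_TREE = ("priority", 1.0, [
    ("weight_ticket", 0.01, [
        ("weight_tickets_share", 1.0, []),
        ("weight_tickets_functional", 1.0, [
            ("weight_user", 0.25, []),
            ("weight_project", 0.25, []),
            ("weight_department", 0.25, []),
            ("weight_job", 0.25, []),
        ]),
        ("override", 1.0, []),
    ]),
    ("weight_urgency", 0.1, [
        ("weight_waiting_time", 1.0, []),
        ("weight_deadline", 1.0, []),
    ]),
    ("weight_priority", 1.0, []),
])


def effective_weights(node, overrides=None, parent_factor=1.0):
    """Return leaf name -> product of the weights on the path from the root.

    `overrides` maps a node name to a replacement weight, e.g. to model
    setting that parameter to zero.
    """
    overrides = overrides or {}
    name, weight, children = node
    factor = parent_factor * overrides.get(name, weight)
    if not children:
        return {name: factor}
    result = {}
    for child in children:
        result.update(effective_weights(child, overrides, factor))
    return result


normal = effective_weights(WEIGHT_TREE)
tickets_off = effective_weights(WEIGHT_TREE, {"weight_ticket": 0.0})
for leaf in normal:
    print(leaf, normal[leaf], tickets_off[leaf])
```

Setting weight_ticket to 0.0 zeroes every ticket leaf (share tree, user, project, department, job, override) while the urgency and POSIX branches keep their weights.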

Weight Configuration Tree

Scheduler configuration weights